Advances in Virtual Reality – Part 1

Did you have a View-Master when you were young? Do you want to try an Oculus Rift or HTC Vive headset? These virtual reality devices show how far the technology has come. We haven’t reached the promise of a fully immersive world, as conveyed in movies such as Tron, Lawnmower Man, The Matrix, and Inception, and of course Star Trek’s holodeck, but we are getting closer. Many believe that virtual reality is on the verge of a popular breakthrough.

As a human factors engineer, I find the current state of virtual reality, exemplified by products such as Oculus Rift and HTC Vive, to be very exciting and full of potential. In this article, I’ll introduce some of the technologies behind these products. Subsequent articles will look at virtual and augmented reality applications in entertainment, industry, and medicine, as well as at the future of virtual reality, focusing on how virtual reality is changing the paradigm of user interface design. For a user interface specialist like myself, this is an exhilarating time.

Virtual and Augmented Reality

Two technologies are actually on the verge of a breakthrough. The first is virtual reality (VR), which “replicates an environment that simulates a physical presence in places in the real world or an imagined world, allowing the user to interact in that world. Virtual realities artificially create sensory experiences, which can include sight, touch, hearing, and smell” (Virtual reality, 2016). Objects in the virtual world appear three-dimensional and life-sized, and are viewed from the perspective of the user as he or she moves among them.

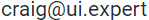

Augmented reality (AR) is related, but instead of replacing the real world, it overlays data on top of it. Google’s seemingly ill-fated Glass is an example of augmented reality, and it illustrates how technology can get ahead of social norms. That said, other forms of AR may be quite successful (and Google Glass may still succeed in the domain of business applications). Head-up displays are an example of augmented reality; they have been used in aircraft for years and, on a more limited basis, in automobiles as well. Here’s an example from Mercedes-Benz, showing a close-up of the driver’s view.

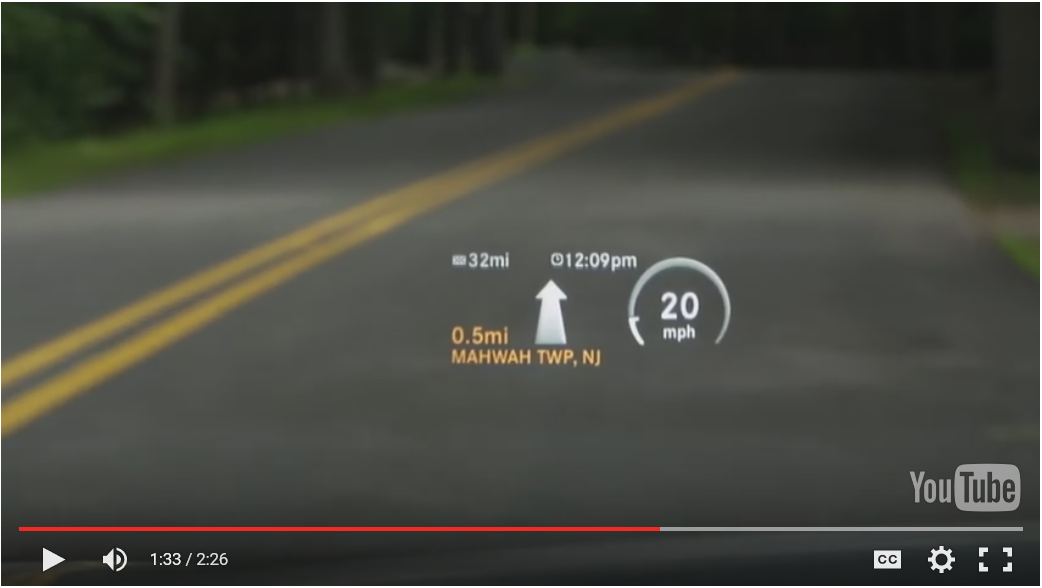

Smartphones, with their cameras, screens, and GPS, offer augmented reality capabilities as well. One example is Golfscape GPS Rangefinder, which shows important distances and course information.

You may already see the potential that virtual and augmented reality offer for user interfaces: they allow us to interact with virtual worlds, or with the real world, free of the constraints of a mouse, touch screen, and keyboard. Interactions can become seamless and natural because they can be designed to mimic the way we interact with real physical objects. The interface responds to the movements of our head, or even our entire body. Turn your head to the right and you see what’s to the right, meaning you “scroll” or “pan” simply by looking. Move closer to an object and it gets larger. Sounds are accurately placed: a sound behind the user actually seems to come from behind. And with the right hardware, touched objects can provide tactile feedback.
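To make the head-tracking idea concrete, here is a minimal Python sketch of two rules a VR renderer follows. It is an illustration only and is not taken from any particular headset's software. First, an object is drawn only if its direction falls within the field of view around where the head is pointing. Second, its apparent (angular) size shrinks as its distance grows. The function names and the 100-degree field of view are hypothetical choices for this example.

```python
import math

def is_in_view(head_yaw_deg: float, object_bearing_deg: float, fov_deg: float) -> bool:
    """True if an object's horizontal direction lies within the horizontal field of view."""
    # Signed angle from where the head points to the object, wrapped to [-180, 180).
    offset = (object_bearing_deg - head_yaw_deg + 180) % 360 - 180
    return abs(offset) <= fov_deg / 2

def angular_size_deg(object_width_m: float, distance_m: float) -> float:
    """Visual angle (in degrees) subtended by an object of a given width at a given distance."""
    return math.degrees(2 * math.atan(object_width_m / (2 * distance_m)))

# An object directly to the user's right (bearing 90 degrees), assuming a 100-degree field of view.
print(is_in_view(0, 90, 100))   # False: looking straight ahead
print(is_in_view(90, 90, 100))  # True: after turning the head to the right

# The same 1 m wide object seen from 2 m away, then from 1 m away (about 28.1 and 53.1 degrees).
print(angular_size_deg(1.0, 2.0), angular_size_deg(1.0, 1.0))
```

Real engines do this in three dimensions with rotations and a perspective projection, but the underlying ideas are the same. Turning the head changes which objects fall inside the view. For objects that are small relative to their distance, halving the distance roughly doubles their apparent size.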

VR began very simply. The View-Master, introduced in 1939, provided a stereoscopic view of still photos. Flight and driving simulators have created limited but increasingly realistic virtual worlds. Online multiplayer games and environments such as World of Warcraft and Second Life allow players to exist in low-fidelity virtual worlds. In the 1990s, the gaming industry introduced products such as SEGA VR glasses and the Nintendo Virtual Boy. Many thought these would bring the breakthrough in VR, but they were not successful in the market. The Nintendo Virtual Boy, for example, was faulted for its high price and for being uncomfortable to play (Virtual Boy, 2016).

The Technologies

So why are people now talking about a breakthrough in VR and AR? Because the technologies that enable them have improved so much. Graphics are faster and sharper, head-mounted displays are lighter and more comfortable, and user movements can be tracked faster and more accurately.

It’s not news that graphics are getting sharper. We see this in high-definition television (and now ultra-high-definition 8K television), video game graphics, and realistic special effects in movies. Our ability to generate and display high-quality graphics continues to astound and is key to successful VR and AR implementations. The better the graphics and the wider the field of view displayed to the user, the more compelling and immersive the virtual world becomes.

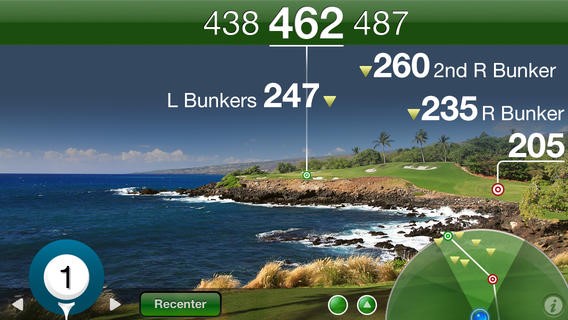

The head-mounted hardware is getting better as well. Most virtual reality is viewed through a headset, as this is a relatively inexpensive, portable, and practical way to provide immersive video for the participant. VR headsets display stereoscopic computer graphics and video, play audio, and track head (and potentially eye) movements. These relatively advanced head-mounted displays finally appear to be hitting the mainstream. A simpler viewer (not really a headset) is Google Cardboard, which holds a smartphone and provides a stereoscopic view: basically a 21st-century View-Master. Over 1,000 Cardboard applications are available.
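The stereoscopic effect that both Cardboard and full headsets rely on comes from showing each eye its own image, rendered from two viewpoints separated by roughly the distance between the user's eyes. The sketch below computes those two viewpoints from a tracked head position and heading. It is a simplified, top-down (2D) illustration written under our own assumptions, not code from Google Cardboard or any VR SDK. The function name, the coordinate convention, and the 63 mm interpupillary distance (IPD) are all assumptions. Real systems typically let the IPD be adjusted.

```python
import math

def eye_positions(head_x: float, head_z: float, yaw_deg: float, ipd_m: float = 0.063):
    """Return ((left_x, left_z), (right_x, right_z)) for a head seen from above.

    Assumed convention for this sketch: yaw 0 faces +z, and positive yaw
    turns the head to the right (toward +x). ipd_m is an assumed typical
    interpupillary distance in meters.
    """
    yaw = math.radians(yaw_deg)
    # Unit vector pointing out of the viewer's right ear, perpendicular to the gaze direction.
    right_x, right_z = math.cos(yaw), -math.sin(yaw)
    half = ipd_m / 2
    left_eye = (head_x - right_x * half, head_z - right_z * half)
    right_eye = (head_x + right_x * half, head_z + right_z * half)
    return left_eye, right_eye

# Each eye's image would be rendered from its own position. The small horizontal
# offset between the two images is what the brain fuses into a sense of depth.
left, right = eye_positions(0.0, 0.0, yaw_deg=0.0)
```

Because both eye positions are derived from the same tracked head pose, turning the head rotates the pair of viewpoints together. This keeps the stereo image consistent as the user looks around.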

Other companies have announced or rolled out more sophisticated virtual and augmented reality headsets. Examples include Facebook’s Oculus Rift, Sony PlayStation VR, HTC Vive, Samsung Gear VR, and Microsoft HoloLens, and there are many more.

HoloLens is an augmented reality headset; it projects virtual images, visible only to the wearer, into the real world. Microsoft’s website shows many examples of how HoloLens can be useful, such as displaying virtual instructions to someone fixing a plumbing problem or allowing an engineering team to view their design changes superimposed on an actual product. HoloLens offers innovative user interface techniques as well; for example, the wearer can use hand motions to control virtual objects that are superimposed on the real world.

Advanced tracking capabilities are the third factor behind the impending breakthrough of VR and AR. Think of any way you interact with the world around you, and likely someone is trying to enable that interaction in the virtual world. I’ve already mentioned head and eye tracking in a headset, which lets us see what’s to the right in our virtual or augmented world simply by looking that way. Yet VR and AR can track more than our head and eyes. Full-body motion sensing has become mainstream in gaming; examples include Microsoft Kinect and the Nintendo Wii. Hardware such as that produced by PrioVR also tracks full-body movements. Other hardware focuses on tracking hand movements. Haptic gloves are a special type of glove that provides force feedback (remember, the world interacts with us as well). Hands Omni gloves are one example; these gloves use small inflatable air bladders to provide feedback.

Researchers are also looking at biosensors that detect nerve and muscle activity directly, or that provide haptic feedback by affecting muscle tension. Down the road, perhaps we will be able to control a virtual environment effectively by directly monitoring our brainwaves. You think it and it happens.

All of these possibilities break user interfaces wide open and allow interaction designers and human factors engineers to move far beyond the mouse and keyboard. In the next article we’ll take a closer look at some of these interfaces in virtual reality applications that are imminent or currently in use, including HoloLens and Oculus.

References

Virtual Boy. (2016, February 28). In Wikipedia. Retrieved February 28, 2016, from https://en.wikipedia.org/wiki/Virtual_Boy#Reception

Virtual reality. (2016, March 8). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Virtual_reality

Dr. Craig Rosenberg is an entrepreneur, human factors engineer, computer scientist, and expert witness. You can learn more about Dr. Rosenberg and his expert witness consulting business at www.ui.expert